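Writing in the cloud with Yuque separates the Markdown posts from the blog build directory. That way I can use as many themes at the same time as I like and, more importantly, edit a static blog entirely online.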

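This article describes file (code) changes as follows: `-` marks a line to delete, `+` marks a line to add (if you copy such a line directly, remember to remove the `+` and align the line with its context), and `...` stands for omitted context. The changes shown apply only to the file (code) versions at the time of writing, so adapt them to your own files as needed.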
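The notation can also be applied mechanically. As a minimal sketch (the function name `applyChangeNotation` is made up for illustration, and it assumes the marker sits in the first column while context lines start with a space), the following drops `-` lines and turns the `+` marker into a space so that added lines stay aligned with their context:

```javascript
// Hypothetical helper: apply the -/+ notation used in this article to a snippet.
// Assumption: the marker is the first character of the line and context lines
// are indented, so a YAML list item such as "- main" is never in column 0.
function applyChangeNotation(text) {
  const result = [];
  for (const line of text.split("\n")) {
    if (line.startsWith("-")) {
      continue; // a line to delete: leave it out
    }
    if (line.startsWith("+")) {
      result.push(" " + line.slice(1)); // a line to add: swap the marker for a space
    } else {
      result.push(line); // context (including "..." elisions) is kept as-is
    }
  }
  return result.join("\n");
}

console.log(applyChangeNotation('  write: {\n-   platform: "yuque",\n+   platform: "yuque-pwd",'));
```

The `...` lines are left in place on purpose: they stand for context that only your own file can supply.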

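Migrating posts

First register a Yuque account. After logging in, create a knowledge base named 个人博客 (Personal Blog), then click New at the top right, choose Import, choose the Markdown format, and batch-import your local posts (the Markdown files under `_posts`; hold Ctrl to select several) into that knowledge base.

After the import succeeds you will notice that the beginning of each post, the front-matter, looks a bit messy. Correct it as shown in the figure below.

![Correcting the front-matter]()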

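Local debugging

Installing Elog

Elog is an open, cross-platform blogging solution that lets you freely combine writing platforms (Yuque/Notion/FlowUs) with blog platforms (Hexo/Vitepress/Confluence/WordPress) and more. It is the key to writing in the cloud with Yuque in this setup.

Following the principle of not upgrading unless necessary, I had been using Node.js v12.14.0 all along. The Elog version installed here requires Node.js 14 or later, so I upgraded to the latest LTS release at the time, version 18. As for how to upgrade: on Windows, uninstall and reinstall; on Linux, I recommend installing through NVM.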

Install @elog/cli globally:

```shell
npm install -g @elog/cli
```

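Go to `<blog build directory>` and run the initialization command (`elog init`). It generates a configuration file, `elog.config.js`, in the root directory along with `.elog.env`, an environment variable file for local debugging.

Add `.elog.env` to `.gitignore` so that private data is not committed:

```diff
  .DS_Store
  Thumbs.db
  db.json
  *.log
  public/
  node_modules/
  .deploy*/
+ .elog.env
```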

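Configuration

Edit `.elog.env` and set the environment variables for Yuque authentication:

```shell
# Yuque (token authentication)
YUQUE_TOKEN=
# Yuque (username and password authentication)
YUQUE_USERNAME=
YUQUE_PASSWORD=
YUQUE_LOGIN=
YUQUE_REPO=
```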

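Choose either the token method or the username-and-password method. Using a token now requires a paid Yuque membership, so without one, use the username-and-password method. For how to obtain `YUQUE_LOGIN` and `YUQUE_REPO`, see the "关键信息获取" (obtaining key information) section of the official Elog documentation.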
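The either/or rule can be checked before running a sync. The sketch below is only an illustration: `pickYuquePlatform` is a hypothetical helper, not part of Elog. It assumes, as the secrets list later in this article suggests, that `YUQUE_LOGIN` and `YUQUE_REPO` are needed with both methods, and it returns the matching `write.platform` value for `elog.config.js`:

```javascript
// Hypothetical helper (not part of Elog): inspect an environment object and
// report which Yuque write platform it can support.
function pickYuquePlatform(env) {
  const has = (name) => typeof env[name] === "string" && env[name].trim() !== "";

  // Assumption: these two are required whichever authentication method is used.
  const missing = ["YUQUE_LOGIN", "YUQUE_REPO"].filter((name) => !has(name));
  if (missing.length > 0) {
    throw new Error("Missing required variables: " + missing.join(", "));
  }

  if (has("YUQUE_TOKEN")) {
    return "yuque"; // token authentication
  }
  if (has("YUQUE_USERNAME") && has("YUQUE_PASSWORD")) {
    return "yuque-pwd"; // username and password authentication
  }
  throw new Error("Set either YUQUE_TOKEN or both YUQUE_USERNAME and YUQUE_PASSWORD");
}

// Example with placeholder values; pass process.env to check a real environment.
console.log(
  pickYuquePlatform({
    YUQUE_USERNAME: "user",
    YUQUE_PASSWORD: "secret",
    YUQUE_LOGIN: "me",
    YUQUE_REPO: "blog",
  })
);
```

Preferring the token when both methods are configured is an arbitrary choice made for this sketch.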

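Next set the environment variables for image-host authentication. Pick one platform among Tencent Cloud, Alibaba Cloud, Upyun, Qiniu Cloud, and GitHub; the detailed setup is described in the same "关键信息获取" section of the official Elog documentation.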

Edit `elog.config.js` and set the writing platform to `yuque-pwd` (Yuque with username-and-password authentication):

```diff
  ...
  write: {
-   platform: "yuque",
+   platform: "yuque-pwd",
  ...
```

Configure local deployment:

```diff
  ...
  deploy: {
    platform: "local",
    local: {
-     outputDir: "./docs",
+     outputDir: "source/_posts",
      filename: "title",
-     format: "markdown",
+     format: "matter-markdown",
      catalog: false,
      formatExt: "",
    },
  ...
```

Enable the image host and set its platform to Tencent Cloud, Alibaba Cloud, Upyun, Qiniu Cloud, or GitHub:

```diff
  ...
  image: {
-   enable: false,
+   enable: true,
-   platform: "local",
+   platform: "<image host platform>",
  ...
```

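Finally configure the image host itself, as described in the "配置详情" (configuration details) section of the official Elog documentation.

Sync

Run the sync command locally (`elog sync -e .elog.env`). If the log reports a successful sync, local debugging is done. Note also that a cache file, `elog.cache.json`, has been generated in the current directory; it holds the raw data of the posts.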

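Continuous integration

Install @elog/cli locally, as a dependency of the project (for example with `npm install @elog/cli`).

To keep hexo from failing when it generates the blog's source files, create a directory named `cache` and move `elog.cache.json` into it.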
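On Linux or macOS this is a `mkdir` followed by an `mv`. Since Windows is also in play here, a small Node.js script is one cross-platform way to do the same thing. This is a sketch only; the function name `moveElogCache` is an assumption, not something Elog provides:

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper: move elog.cache.json from the project root into
// <root>/cache. Returns the new path, or null when there is nothing to move.
function moveElogCache(rootDir) {
  const source = path.join(rootDir, "elog.cache.json");
  const cacheDir = path.join(rootDir, "cache");
  const target = path.join(cacheDir, "elog.cache.json");

  // recursive: true means no error is raised if the directory already exists
  fs.mkdirSync(cacheDir, { recursive: true });

  if (!fs.existsSync(source)) {
    return null; // already moved, or no sync has been run yet
  }
  fs.renameSync(source, target);
  return target;
}

// Usage, from the blog build directory:
// moveElogCache(process.cwd());
```

The new location only takes effect once the sync command is told about it, which is what the `-a cache/elog.cache.json` option in the npm script is for.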

Edit `package.json` and add an npm script for syncing:

```diff
  ...
  "scripts": {
    "build": "hexo generate",
    "clean": "hexo clean",
    "deploy": "hexo deploy",
    "server": "hexo server",
+   "sync": "elog sync -a cache/elog.cache.json"
  },
  ...
```

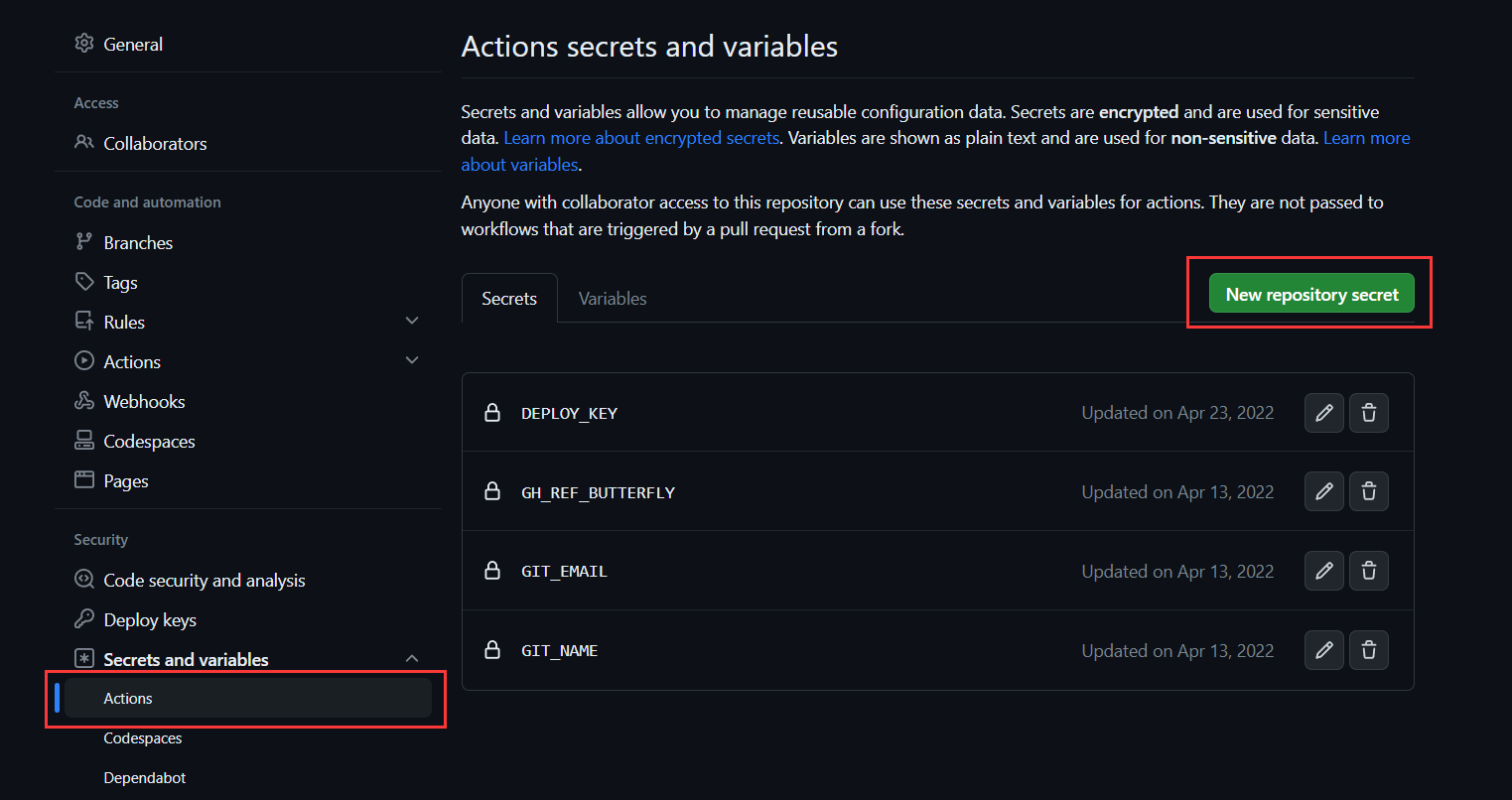

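Go to the blog build repository and add the secrets as shown below: the values of `YUQUE_TOKEN` (or `YUQUE_USERNAME` and `YUQUE_PASSWORD`), `YUQUE_LOGIN`, and `YUQUE_REPO`, plus the secrets for the image host. With Tencent Cloud as the image host, for example, add `COS_SECRET_ID`, `COS_SECRET_KEY`, `COS_BUCKET`, `COS_REGION`, and `COS_HOST`.

![Adding secrets]()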

Edit the GitHub Actions workflow:

```diff
  name: hexo-build
  # Run the jobs defined below whenever the main branch receives a push
  on:
    push:
      branches:
        - main
  # Set the time zone to Shanghai
  env:
    TZ: Asia/Shanghai
  jobs:
    # Define a job named blog-build
    blog-build:
      # Operating system to run on
      runs-on: ubuntu-latest
      # Steps
      steps:
        # Check out the repository's default branch (main here) along with its submodules (the theme repository here)
        - name: Checkout
          uses: actions/checkout@v3
          with:
            submodules: true
        # Install the Node.js release aliased lts/Hydrogen (version 18) and enable the global npm cache
-       - name: Install Node.js v12.14.0
+       - name: Install Node.js lts/Hydrogen
          uses: actions/setup-node@v3
          with:
-           node-version: "12.14.0"
+           node-version: "lts/Hydrogen"
            cache: "npm"
        # Cache the node_modules folder under a unique key
        - name: Cache dependencies
          uses: actions/cache@v3
          id: cache-dependencies
          with:
            path: node_modules
            key: ${{runner.OS}}-${{hashFiles('**/package-lock.json')}}
        # Reinstall the dependencies if the key does not match; otherwise reuse the cache
        - name: Install dependencies
          if: steps.cache-dependencies.outputs.cache-hit != 'true'
          run: npm ci
+       - name: Pull posts from Yuque
+         env:
+           YUQUE_TOKEN: ${{ secrets.YUQUE_TOKEN }}
+           YUQUE_LOGIN: ${{ secrets.YUQUE_LOGIN }}
+           YUQUE_REPO: ${{ secrets.YUQUE_REPO }}
+           COS_SECRET_ID: ${{ secrets.COS_SECRET_ID }}
+           COS_SECRET_KEY: ${{ secrets.COS_SECRET_KEY }}
+           COS_BUCKET: ${{ secrets.COS_BUCKET }}
+           COS_REGION: ${{ secrets.COS_REGION }}
+           COS_HOST: ${{ secrets.COS_HOST }}
+         run: |
+           # elog.cache.json is kept in the cache subdirectory, so create that directory first if it does not exist yet
+           [ ! -d "cache" ] && mkdir cache
+           npm run sync
        - name: Setup private rsa key
          env:
            DEPLOY_KEY: ${{secrets.DEPLOY_KEY}}
          run: |
            mkdir -p ~/.ssh/
            echo "$DEPLOY_KEY" > ~/.ssh/id_rsa
            chmod 600 ~/.ssh/id_rsa
            ssh-keyscan github.com >> ~/.ssh/known_hosts
            ssh-keyscan gitee.com >> ~/.ssh/known_hosts
        - name: Download and clone PUBLIC
          run: |
            # Download your own GitHub user repository; the downloaded file is master.zip
            curl -LsO https://github.com/<your-github-username>/<your-github-username>.github.io/archive/refs/heads/master.zip
            # Unzip it
            unzip master.zip -d .
            # Rename it to public
            mv <your-github-username>.github.io-master/ public
            # Clone your own GitHub user repository under the name .deploy_git
            git clone git@github.com:<your-github-username>/<your-github-username>.github.io.git .deploy_git
        - name: Download DB
          uses: dawidd6/action-download-artifact@v2
          continue-on-error: true # Artifacts are kept for at most 90 days; after that this step fails, so let the job continue on error
          with:
            github_token: ${{secrets.GITHUB_TOKEN}}
            name: "DB"
        - name: Generate and deploy
          run: |
            git config --global user.name "${{secrets.GIT_NAME}}"
            git config --global user.email "${{secrets.GIT_EMAIL}}"
            npm run build && npm run deploy
        - name: Upload DB for next workflow to download
          uses: actions/upload-artifact@v3
          with:
            name: "DB"
            path: db.json
            retention-days: 90
+       - name: Upload Cache for next workflow to download
+         uses: actions/upload-artifact@v3
+         with:
+           name: "Cache"
+           path: cache
+           retention-days: 90
+       - name: Upload Posts for next workflow to download
+         uses: actions/upload-artifact@v3
+         with:
+           name: "Posts"
+           path: "source/_posts"
+           retention-days: 90
```

Commit and push:

```shell
git add .
git commit -m ":green_heart: Write in the cloud with Yuque via elog"
git push
```

Edit the GitHub Actions workflow once more:

```diff
  name: hexo-build
  on:
    # Change the trigger for the jobs below to an external event of type publish
-   push:
-     branches:
-       - main
+   repository_dispatch:
+     types:
+       - publish
  # Set the time zone to Shanghai
  env:
    TZ: Asia/Shanghai
  jobs:
    # Define a job named blog-build
    blog-build:
      # Operating system to run on
      runs-on: ubuntu-latest
      # Steps
      steps:
        # Check out the repository's default branch (main here) along with its submodules (the theme repository here)
        - name: Checkout
          uses: actions/checkout@v3
          with:
            submodules: true
        # Install the Node.js release aliased lts/Hydrogen (version 18) and enable the global npm cache
        - name: Install Node.js lts/Hydrogen
          uses: actions/setup-node@v3
          with:
            node-version: "lts/Hydrogen"
            cache: "npm"
        # Cache the node_modules folder under a unique key
        - name: Cache dependencies
          uses: actions/cache@v3
          id: cache-dependencies
          with:
            path: node_modules
            key: ${{runner.OS}}-${{hashFiles('**/package-lock.json')}}
        # Reinstall the dependencies if the key does not match; otherwise reuse the cache
        - name: Install dependencies
          if: steps.cache-dependencies.outputs.cache-hit != 'true'
          run: npm ci
+       - name: Download Posts from the last workflow
+         uses: dawidd6/action-download-artifact@v2
+         continue-on-error: true # Artifacts are kept for at most 90 days; after that this step fails, so let the job continue on error
+         with:
+           github_token: ${{secrets.GITHUB_TOKEN}}
+           name: "Posts"
+           path: "source/_posts"
+       - name: Download Cache from the last workflow
+         uses: dawidd6/action-download-artifact@v2
+         continue-on-error: true
+         with:
+           github_token: ${{secrets.GITHUB_TOKEN}}
+           name: "Cache"
+           path: "cache"
        - name: Pull posts from Yuque
          env:
            YUQUE_TOKEN: ${{ secrets.YUQUE_TOKEN }}
            YUQUE_LOGIN: ${{ secrets.YUQUE_LOGIN }}
            YUQUE_REPO: ${{ secrets.YUQUE_REPO }}
            COS_SECRET_ID: ${{ secrets.COS_SECRET_ID }}
            COS_SECRET_KEY: ${{ secrets.COS_SECRET_KEY }}
            COS_BUCKET: ${{ secrets.COS_BUCKET }}
            COS_REGION: ${{ secrets.COS_REGION }}
            COS_HOST: ${{ secrets.COS_HOST }}
          run: |
            [ ! -d "cache" ] && mkdir cache
            npm run sync
        - name: Setup private rsa key
          env:
            DEPLOY_KEY: ${{secrets.DEPLOY_KEY}}
          run: |
            mkdir -p ~/.ssh/
            echo "$DEPLOY_KEY" > ~/.ssh/id_rsa
            chmod 600 ~/.ssh/id_rsa
            ssh-keyscan github.com >> ~/.ssh/known_hosts
            ssh-keyscan gitee.com >> ~/.ssh/known_hosts
        - name: Download and clone PUBLIC
          run: |
            # Download your own GitHub user repository; the downloaded file is master.zip
            curl -LsO https://github.com/<your-github-username>/<your-github-username>.github.io/archive/refs/heads/master.zip
            # Unzip it
            unzip master.zip -d .
            # Rename it to public
            mv <your-github-username>.github.io-master/ public
            # Clone your own GitHub user repository under the name .deploy_git
            git clone git@github.com:<your-github-username>/<your-github-username>.github.io.git .deploy_git
        - name: Download DB
          uses: dawidd6/action-download-artifact@v2
          continue-on-error: true # Artifacts are kept for at most 90 days; after that this step fails, so let the job continue on error
          with:
            github_token: ${{secrets.GITHUB_TOKEN}}
            name: "DB"
        - name: Generate and deploy
          run: |
            git config --global user.name "${{secrets.GIT_NAME}}"
            git config --global user.email "${{secrets.GIT_EMAIL}}"
            npm run build && npm run deploy
        - name: Upload DB for next workflow to download
          uses: actions/upload-artifact@v3
          with:
            name: "DB"
            path: db.json
            retention-days: 90
        - name: Upload Cache for next workflow to download
          uses: actions/upload-artifact@v3
          with:
            name: "Cache"
            path: cache
            retention-days: 90
        - name: Upload Posts for next workflow to download
          uses: actions/upload-artifact@v3
          with:
            name: "Posts"
            path: "source/_posts"
            retention-days: 90
```

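Add `_posts` under `source` and `elog.cache.json` under `cache` to `.gitignore`, then delete them from the repository. The files in these two places no longer need to be committed, because each workflow run downloads them from the artifacts of the previous run.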

Commit and push one last time:

```shell
git add .
git commit -m ":green_heart: Write in the cloud with Yuque via elog (final)"
git push
```

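From now on, after writing or updating a post on Yuque, you only need to enter the following address (the API that fires the external event) in the browser's address bar to trigger continuous integration. That is cloud writing.

```text
https://serverless-api-elog.vercel.app/api/github?user=<your GitHub username>&repo=<blog build repository name>&event_type=publish&token=<your generated token>
```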
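Assembling that address by hand is error-prone, so here is a minimal sketch of a builder. The function name `buildTriggerUrl` and its `base` parameter are assumptions made for illustration, and `alice`, `blog`, and `T` are placeholder values. The `event_type` has to match the `publish` type listed under `repository_dispatch` in the workflow.

```javascript
// Hypothetical helper: build the trigger address described above.
// `base` defaults to the public endpoint from this article; pass your own
// domain instead if you host the API yourself.
function buildTriggerUrl({
  user,
  repo,
  token,
  eventType = "publish",
  base = "https://serverless-api-elog.vercel.app",
}) {
  const url = new URL("/api/github", base);
  // URLSearchParams percent-encodes the values and keeps insertion order
  url.search = new URLSearchParams({
    user,
    repo,
    event_type: eventType,
    token,
  }).toString();
  return url.toString();
}

console.log(buildTriggerUrl({ user: "alice", repo: "blog", token: "T" }));
// prints https://serverless-api-elog.vercel.app/api/github?user=alice&repo=blog&event_type=publish&token=T
```

Passing `base: "https://publish.example.com"` yields the same path and query on a custom domain.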

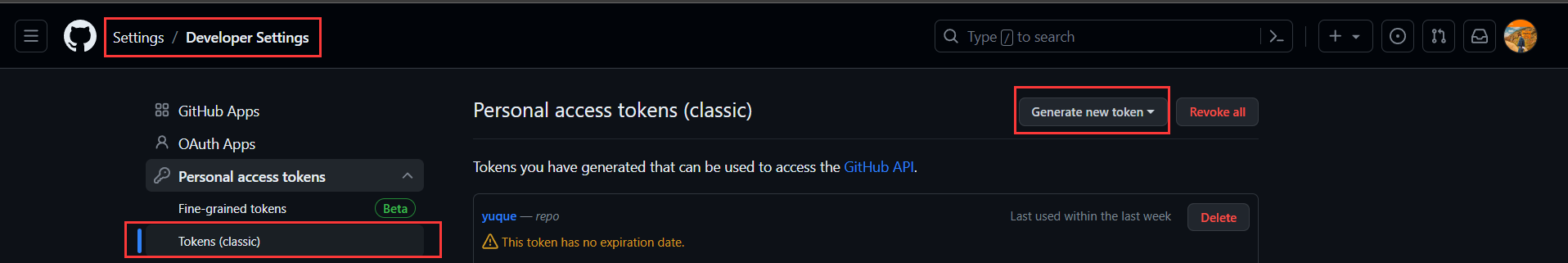

How to generate the token (tick the `repo` scope):

![image.png]()

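The API above is deployed on Vercel by the Elog project and uses Vercel's default domain, which is frequently blocked in mainland China. You can deploy your own copy and put it behind your own domain (a domain without ICP filing works too, since Vercel is hosted outside China).

Fork serverless-api, log in to Vercel, create a new project, import the fork, and deploy it. In the project's domain settings add your own domain, for example publish.xxx.xx, then add a CNAME record at your domain provider, and finally return to the domain settings to confirm that the record has taken effect. Replacing the domain in the address above with your own then gives you your own API.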
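For orientation, an endpoint like this presumably boils down to a single call to GitHub's repository dispatch REST endpoint (`POST /repos/{owner}/{repo}/dispatches`). The sketch below builds that request directly. It is an assumption about what happens behind the API, not code taken from serverless-api, and `buildDispatchRequest` is a hypothetical name:

```javascript
// Hypothetical helper: describe the GitHub REST request that fires a
// repository_dispatch event. Nothing is sent until the result is passed to fetch.
function buildDispatchRequest({ user, repo, token, eventType = "publish" }) {
  const owner = encodeURIComponent(user);
  const name = encodeURIComponent(repo);
  return {
    url: `https://api.github.com/repos/${owner}/${name}/dispatches`,
    options: {
      method: "POST",
      headers: {
        Accept: "application/vnd.github+json",
        Authorization: `Bearer ${token}`, // the token generated with the repo scope
        "Content-Type": "application/json",
      },
      // event_type must match a type listed under repository_dispatch in the workflow
      body: JSON.stringify({ event_type: eventType }),
    },
  };
}

// Usage (Node.js 18 ships a global fetch); GitHub answers 204 No Content on success:
// const { url, options } = buildDispatchRequest({ user: "alice", repo: "blog", token: process.env.GITHUB_TOKEN });
// fetch(url, options).then((res) => console.log(res.status));
```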